Author: Luca Bares, Senior SEO Analyst, Wayfair.

Click-through rate (CTR) is an important metric with many uses. They range from estimating revenue opportunities and prioritizing keywords to assessing the market impact of changes in the SERP.

Many SEO specialists build their own CTR curves for their sites to make these forecasts even more accurate. However, if those curves are built from Google Search Console (GSC) data, things are not so simple.

GSC is known to be an imperfect tool that can return inaccurate data. This muddies the data we get from GSC and can make it hard to interpret the CTR curves we build with it. Fortunately, there are ways to control for these inaccuracies, so you can understand much better what your data is telling you.

If you carefully clean your data and think through your analysis methodology, you can calculate CTR for your site much more accurately. This takes four steps:

- Extract your sites' keyword data from GSC. The more data you can get, the better.

- Remove keywords that can skew the overall picture. Branded queries can bias CTR curves, so they should be removed.

- Find the optimal impression level for your dataset. Google samples data at low impression levels, so it is important to remove keywords for which Google may report inaccurate data at those lower levels.

- Choose your rank position methodology. No dataset is perfect, so you may want to change how you classify rankings depending on the size of your keyword sample.

A quick aside

Before getting into the specifics of building CTR curves, it is worth covering the simplest way to calculate CTR. This is also the method used throughout the article.

To calculate CTR, export the keywords your site ranks for, along with their clicks, impressions and position.

Next, at each rank position in the GSC data, divide the sum of clicks by the sum of impressions. The result is your own CTR curve. For more detail on actually crunching the numbers for CTR curves, see the article by SEER.
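The per-position calculation described above can be sketched in Python. This is a minimal illustration, not SEER's or the author's code. It makes two assumptions:

- each GSC row has been loaded as a dict with the hypothetical keys `query`, `clicks`, `impressions` and `position`;
- fractional average positions are rounded half up to a whole rank.

```python
import math
from collections import defaultdict


def ctr_curve(rows, max_position=10):
    """Pooled CTR per rank: sum of clicks / sum of impressions at each rounded position.

    `rows` is a list of dicts with the hypothetical keys
    'query', 'clicks', 'impressions' and 'position'.
    """
    clicks = defaultdict(int)
    impressions = defaultdict(int)
    for row in rows:
        # Round half up (Python's built-in round() would send 2.5 to 2).
        rank = math.floor(row["position"] + 0.5)
        if 1 <= rank <= max_position:
            clicks[rank] += row["clicks"]
            impressions[rank] += row["impressions"]
    return {
        rank: clicks[rank] / impressions[rank]
        for rank in sorted(impressions)
        if impressions[rank] > 0
    }


rows = [
    {"query": "a", "clicks": 30, "impressions": 100, "position": 1.2},
    {"query": "b", "clicks": 10, "impressions": 100, "position": 1.4},
    {"query": "c", "clicks": 5, "impressions": 50, "position": 2.6},
]
print(ctr_curve(rows))  # {1: 0.2, 3: 0.1}
```

Dividing summed clicks by summed impressions weights each keyword by its impressions. It is not the plain average of per-keyword CTRs.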

The calculation gets fairly complicated once you try to control for the bias inherent in CTR data. We know this approach yields flawed data, but we don't have many other options. The only way forward is to remove as much bias from our dataset as possible and to stay aware of the problems that come with using it.

If you don't control and clean the data pulled from GSC, you can end up with results that defy logic. For example, your curves might show average CTR at positions 2 and 3 well above position 1.

If you don't realize that Search Console data can be inaccurate, you may take it at face value and either:

a) try to come up with hypotheses, based on incorrect data, for why the CTR curves look the way they do; or

b) derive inaccurate estimates and forecasts from these CTR curves.

Step 1. Extract the data

The first part of any analysis is getting the data.

- Google Search Console

Search Console is the simplest platform for getting the data that Google itself collects. You can log in and export all of your keyword data for the last 3 months, and Google will download it automatically as a CSV file.

The downside of this method is that GSC exports only 1,000 keywords at a time, which makes your sample far too small for analysis. You can work around this limit by filtering on the main query terms you rank for and downloading several files, but that is a fairly laborious process. The methods listed below are better and easier.
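If you do take the manual route, the separately filtered CSV exports still have to be combined without double-counting queries that appear in more than one file. Below is a minimal sketch. The `merge_gsc_exports` helper is hypothetical. It assumes the export columns are named `Top queries`, `Clicks`, `Impressions` and `Position`; check the headers in your own files, because these names are an assumption.

```python
import csv
import io


def merge_gsc_exports(csv_texts, query_column="Top queries"):
    """Combine several GSC CSV exports, keeping the first row seen for each query.

    Column names are assumptions about the export format; adjust them to your files.
    """
    merged = {}
    for text in csv_texts:
        for row in csv.DictReader(io.StringIO(text)):
            query = row[query_column]
            if query not in merged:
                merged[query] = {
                    "query": query,
                    "clicks": int(row["Clicks"]),
                    "impressions": int(row["Impressions"]),
                    "position": float(row["Position"]),
                }
    return list(merged.values())
```

In practice, read each file with `open(path, newline="", encoding="utf-8")` and pass its contents in. Keeping only the first copy of a query assumes all files cover the same date range, so any duplicate rows are identical.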

- Google Data Studio

For anyone who isn't a programmer, this is by far the best option. Data Studio connects directly to your GSC account and places no limit on how much data you can download. For the same three-month period, this tool can pull 200,000 keywords (!) instead of the 1,000 you get from GSC.

- Google Search Console API

One of the best ways to get the data you need is to connect directly to the source through its API.

This method gives you much more control over the data you extract, and you get a fairly large dataset.

The main drawback is that you need programming knowledge and resources.
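For readers comfortable with code, here is a sketch of the API route in Python. It assumes you already have an authorized client, for example one built with `googleapiclient.discovery.build("searchconsole", "v1", credentials=creds)` from the `google-api-python-client` package; authentication is out of scope here.

The Search Analytics `query` method returns at most 25,000 rows per request. The hypothetical helper `fetch_all_queries` therefore pages through the results with `startRow` until a short page comes back.

```python
def fetch_all_queries(service, site_url, start_date, end_date, page_size=25000):
    """Page through Search Analytics results for the 'query' dimension.

    `service` is an authorized Search Console client (assumed, built elsewhere).
    Dates are 'YYYY-MM-DD' strings.
    """
    rows, start_row = [], 0
    while True:
        body = {
            "startDate": start_date,
            "endDate": end_date,
            "dimensions": ["query"],
            "rowLimit": page_size,
            "startRow": start_row,
        }
        response = service.searchanalytics().query(siteUrl=site_url, body=body).execute()
        page = response.get("rows", [])  # the key is absent when there are no more rows
        for r in page:
            rows.append({
                "query": r["keys"][0],
                "clicks": r["clicks"],
                "impressions": r["impressions"],
                "position": r["position"],
            })
        if len(page) < page_size:
            break  # a short (or empty) page means we've reached the end
        start_row += page_size
    return rows
```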

Note: the tools in this section are listed by how much data you can get with them, from least to most.

Step 2. Remove keywords that can skew the overall picture

Once you have extracted the data, you need to clean it.

- Remove branded keywords

When you build general CTR curves, it is important to remove all branded keywords. These queries usually have a high CTR, which skews the sample averages, so they should be removed.

- Remove keywords tied to SERP features

If you know that your site regularly appears in certain SERP features for specific queries, such as knowledge panels, remove those queries too. We are calculating CTR for positions 1-10, and SERP features can skew the averages.
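Both removals can be expressed as one filter. Below is a minimal sketch, assuming you maintain two lists yourself: brand terms, and queries known to trigger SERP features. The function name and example values are hypothetical.

```python
import re


def remove_biased_keywords(rows, brand_terms, serp_feature_queries):
    """Drop branded queries and queries known to trigger SERP features.

    `brand_terms` and `serp_feature_queries` are lists you maintain yourself.
    """
    brand_re = (
        re.compile("|".join(re.escape(t) for t in brand_terms), re.IGNORECASE)
        if brand_terms
        else None
    )
    feature_set = {q.strip().lower() for q in serp_feature_queries}
    kept = []
    for row in rows:
        query = row["query"]
        if brand_re is not None and brand_re.search(query):
            continue  # branded: CTR is typically inflated
        if query.strip().lower() in feature_set:
            continue  # SERP-feature query: distorts the position 1-10 averages
        kept.append(row)
    return kept
```

Substring matching is deliberately broad. A short brand name can also match inside unrelated words, so you may need word boundaries (`\b`) or a hand-reviewed list instead.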

Step 3. Determine the optimal GSC impression level for your data

The biggest bias in GSC data comes from the fact that it includes keywords with very few impressions, and these need to be removed.

For some reason, Google significantly overstates CTR for queries with few impressions. For example, we made an impression distribution chart from GSC data for keywords with only one impression, showing the CTR at each position.

According to this chart, most keywords with a single impression have a CTR of 100%. It is extremely unlikely that such keywords really achieve that CTR, and especially unlikely for keywords ranking below #1. This is fairly strong evidence that low-impression data can't be trusted. We should therefore limit the number of such keywords in our sample.
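To run the check behind this chart on your own data, count at each rounded position how many single-impression keywords report a click. With one impression, a click means a 100% CTR. Below is a minimal sketch, assuming rows are dicts with the hypothetical keys `clicks`, `impressions` and `position`.

```python
import math
from collections import defaultdict


def single_impression_full_ctr_share(rows, max_position=10):
    """Share of 1-impression keywords with a 100% CTR (at least one click), per rounded position."""
    total = defaultdict(int)
    full = defaultdict(int)
    for row in rows:
        if row["impressions"] != 1:
            continue
        rank = math.floor(row["position"] + 0.5)  # round half up
        if 1 <= rank <= max_position:
            total[rank] += 1
            if row["clicks"] >= 1:
                full[rank] += 1
    return {rank: full[rank] / total[rank] for rank in sorted(total)}
```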

3.1. Use normal distribution curves to calculate CTR

To confirm further that Google is providing skewed data, let's look at the distribution of CTR across all keywords in our dataset.

Since we are calculating average CTR, the data should follow a normal distribution (a bell curve). In most cases, however, CTR distributions built from GSC data are heavily skewed to the left with long tails. This again indicates that Google reports very high CTR at low impression volumes.

As we raise the minimum number of impressions for the keyword sets we analyze, the distribution moves closer and closer to the center of the chart. Below is an example of a site's CTR distribution in increments of 0.001.

The chart above uses a very low impression threshold of about 25. Most of the data sits on the right side of the chart, while the small, tall cluster on the left indicates that this site has a very high CTR. However, if we raise the keyword impression threshold to 5,000, the keyword distribution moves much closer to the center.

This chart will most likely never be centered around a 50% CTR, because that would be a very high average, so the chart should be skewed to the left. The main problem is that we don't know by how much, because Google gives us sampled data. The best we can do is make an educated guess. That raises a question: what is the right impression level for filtering out keywords with bad data?

One way to find the right impression level for building CTR curves is to use the method above to see when the CTR distribution approaches normal. A normally distributed CTR dataset has fewer spikes and is less likely to contain many skewed data points from Google.
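The article judges closeness to a normal distribution by eye. As a numeric stand-in, you can track the sample skewness of per-keyword CTR as the minimum impression threshold rises. This is our substitution, not the author's method. Values nearer 0 mean a more symmetric distribution. The thresholds and row format are illustrative assumptions.

```python
def ctr_skewness_by_threshold(rows, thresholds):
    """Sample skewness of per-keyword CTR for keywords with impressions >= each threshold.

    Uses the uncorrected (Fisher-Pearson) moment estimator. Thresholds are
    assumed to be >= 1, so no keyword with 0 impressions is divided by.
    Returns None for a threshold that leaves fewer than 3 keywords.
    """
    result = {}
    for threshold in thresholds:
        ctrs = [r["clicks"] / r["impressions"] for r in rows if r["impressions"] >= threshold]
        n = len(ctrs)
        if n < 3:
            result[threshold] = None
            continue
        mean = sum(ctrs) / n
        m2 = sum((c - mean) ** 2 for c in ctrs) / n
        m3 = sum((c - mean) ** 3 for c in ctrs) / n
        result[threshold] = m3 / m2 ** 1.5 if m2 > 0 else 0.0
    return result
```

For example, `ctr_skewness_by_threshold(rows, [1, 25, 100, 500, 1000, 5000])` shows how the shape changes as low-impression keywords are filtered out.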

3.2. Determine the best impression level for calculating your site's CTR

Instead of normal distribution curves, you can also build impression tiers to see where the data you're analyzing varies least. The lower the variance in your estimates, the closer you get to an accurate CTR curve.

- Tiered CTR

Tiered CTR should be calculated separately for each site. GSC's sampling differs from property to property depending on the keywords it ranks for.

For example, we have seen CTR curves off by 30% when the calculation wasn't properly controlled. This step matters because using every data point in your CTR calculation can heavily skew your results. Using too few data points, on the other hand, leaves a sample too small to give an accurate picture of your CTR. The key is to find the sweet spot between the two.

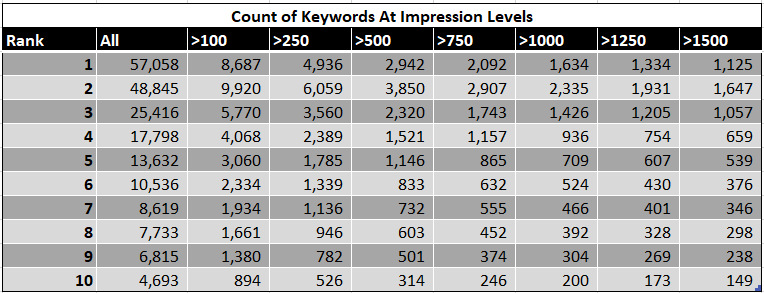

The tiered table above shows pronounced variability between the "all impressions" tier and the >250 impressions tier. Past that mark, however, the differences between tiers are small.

For the site analyzed here, the right impression level is >750, because from that tier on the differences between tiers are fairly small. At the same time, the >750 tier still leaves enough keywords at each ranking position in our dataset.
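Below is a sketch of the tiered table. It assumes each tier means "impressions greater than N", with N = 0 meaning all impressions, and that rows are dicts with the hypothetical keys `clicks`, `impressions` and `position`.

The `first_stable_tier` rule is one illustrative way to formalize "the differences between tiers are small". It picks the first tier whose CTR at every shared position is within a fixed tolerance of the next tier. The tolerance is a judgment call, not a value from the article.

```python
import math
from collections import defaultdict


def tiered_ctr(rows, tiers, max_position=10):
    """For each tier N, pooled CTR per rounded position using only keywords with impressions > N."""
    table = {}
    for tier in tiers:
        clicks = defaultdict(int)
        impressions = defaultdict(int)
        for row in rows:
            if row["impressions"] <= tier:
                continue
            rank = math.floor(row["position"] + 0.5)  # round half up
            if 1 <= rank <= max_position:
                clicks[rank] += row["clicks"]
                impressions[rank] += row["impressions"]
        table[tier] = {rank: clicks[rank] / impressions[rank] for rank in sorted(impressions)}
    return table


def first_stable_tier(table, tolerance):
    """Return the first tier whose CTRs are within `tolerance` of the next tier at every shared position."""
    tiers = sorted(table)
    for current, following in zip(tiers, tiers[1:]):
        shared = table[current].keys() & table[following].keys()
        if shared and all(
            abs(table[current][p] - table[following][p]) <= tolerance for p in shared
        ):
            return current
    return None
```

For example, call `tiered_ctr(rows, [0, 250, 500, 750, 1000])` and pass the resulting table to `first_stable_tier`.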

When building tiered CTR curves, it is also important to count how much data goes into each data point in every tier.

On smaller sites, you may not have enough data to calculate CTR curves reliably, and the tiered curves alone won't make that obvious. That is why you need to know how much data you have at each stage: only then can you tell which impression level is most accurate for your site.
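Counting the keywords behind each cell of the tiered table makes thin data visible. Below is a minimal sketch, using the same "impressions greater than N" tier definition. `min_keywords` is a hypothetical cutoff you would choose yourself.

```python
import math
from collections import Counter


def keyword_counts_by_tier(rows, tiers, max_position=10):
    """Number of keywords per rounded position in each tier (impressions > tier)."""
    counts = {}
    for tier in tiers:
        c = Counter()
        for row in rows:
            if row["impressions"] > tier:
                rank = math.floor(row["position"] + 0.5)  # round half up
                if 1 <= rank <= max_position:
                    c[rank] += 1
        counts[tier] = dict(c)
    return counts


def thin_cells(counts, min_keywords, max_position=10):
    """(tier, position) pairs backed by fewer than `min_keywords` keywords."""
    return [
        (tier, rank)
        for tier in sorted(counts)
        for rank in range(1, max_position + 1)
        if counts[tier].get(rank, 0) < min_keywords
    ]
```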

Step 4. Decide which position methodology to use

Once you have determined the right impression level, filter your data. You can then start calculating CTR curves from the impression, click and position statistics.

The problem with position data is that it is often inaccurate. If you have a good keyword tracking tool, it is much better to use your own data instead of Google's. Most people can't track that many positions, though, so they have to rely on Google's data. That is perfectly acceptable, but it calls for caution.

How to use GSC position data

When you calculate CTR curves from GSC average position data, a question arises: should you use rounded or exact numbers?

Exact numbers give us the best picture of CTR at position 1. Here, "exact" means an average position that is a whole number, for example exactly 1.0. Keywords with an exact position are more likely to have actually held that position throughout the period the data covers. The catch is that average position is an average. We have no way of knowing whether a keyword ranked at that position the whole time or only part of it.

Fortunately, when CTR by exact position and CTR by rounded position are both based on enough data, their estimates are similar. If you don't have enough data, however, average position can fluctuate. Rounded positions give us much more data, so it makes sense to use them if you lack enough data at exact positions.

There is one caveat, concerning the CTR estimate for position 1. Here it is better to use the exact position rather than the rounded one, to avoid underestimating CTR. Rounding also pulls in keywords averaging up to 1.49, which likely spent part of the period below position 1.
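Below is a sketch of the two bucketing methods, plus a hybrid curve that follows the caveat above: exact position for rank 1, rounded positions elsewhere. It assumes rows are dicts with the hypothetical keys `clicks`, `impressions` and `position`, and that "exact" means the average position is a whole number.

```python
import math
from collections import defaultdict


def ctr_by_position(rows, method="rounded", max_position=10):
    """Pooled CTR per position.

    method='rounded': bucket by average position rounded half up.
    method='exact':   keep only keywords whose average position is a whole number.
    """
    clicks = defaultdict(int)
    impressions = defaultdict(int)
    for row in rows:
        pos = float(row["position"])
        if method == "exact":
            if not pos.is_integer():
                continue
            rank = int(pos)
        elif method == "rounded":
            rank = math.floor(pos + 0.5)
        else:
            raise ValueError("method must be 'rounded' or 'exact'")
        if 1 <= rank <= max_position:
            clicks[rank] += row["clicks"]
            impressions[rank] += row["impressions"]
    return {rank: clicks[rank] / impressions[rank] for rank in sorted(impressions)}


def hybrid_curve(rows, max_position=10):
    """Rounded positions everywhere, except exact-only data for position 1 when available."""
    curve = ctr_by_position(rows, "rounded", max_position)
    exact = ctr_by_position(rows, "exact", max_position)
    if 1 in exact:
        curve[1] = exact[1]
    return curve
```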

Adjusted exact position

So if you have enough data, use only exact position figures for position 1. Smaller sites can use an adjusted exact position instead.

Google reports average position to up to two decimal places. One way to get a "more exact" position #1 is therefore to include every keyword with an average position below 1.1. This adds a couple hundred keywords and makes your data more reliable.

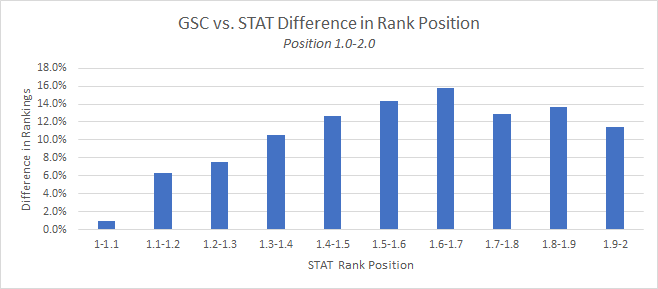
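The adjusted exact position for rank 1 amounts to a simple cutoff on average position. Below is a minimal sketch, assuming rows are dicts with the hypothetical keys `clicks`, `impressions` and `position`. It returns the pooled CTR together with the number of keywords it rests on, so the sample size stays visible.

```python
def adjusted_position_one_ctr(rows, cutoff=1.1):
    """Pooled CTR for keywords with average position strictly below `cutoff`, plus the keyword count."""
    selected = [r for r in rows if r["position"] < cutoff]
    impressions = sum(r["impressions"] for r in selected)
    ctr = sum(r["clicks"] for r in selected) / impressions if impressions else None
    return ctr, len(selected)
```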

This also shouldn't lower our average much, since GSC's average position is somewhat imprecise anyway. At Wayfair we use STAT as our keyword rank tracker. Comparing GSC's average position with STAT's data, we found that rankings near position 1 are close but not 100% identical. The further down you go, the wider the gap between STAT and GSC becomes. So watch how far from the top you reach when adding more keywords to your dataset.

We ran this analysis for every position tracked for Wayfair and found that the lower the position, the weaker the match between the two tools. Google's ranking data is not very high quality overall, but it is accurate enough near position 1. That is why we are comfortable using adjusted exact position to expand our dataset without worrying about losing quality (within reason).
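A comparison like this can be sketched as the mean absolute gap between GSC average position and the tracker's rank, grouped by tracked rank. STAT's export format isn't described here, so the sketch assumes two hypothetical dicts that map each query to its GSC average position and to its tracker rank, respectively.

```python
from collections import defaultdict


def position_gap_by_rank(gsc_positions, tracker_positions, max_position=10):
    """Mean |GSC average position - tracker rank| per tracker rank, over queries present in both."""
    gaps = defaultdict(list)
    for query, tracked in tracker_positions.items():
        if query in gsc_positions and 1 <= tracked <= max_position:
            gaps[tracked].append(abs(gsc_positions[query] - tracked))
    return {rank: sum(values) / len(values) for rank, values in sorted(gaps.items())}
```

A gap that grows with rank would match the pattern described above.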

Conclusion

GSC is an imperfect tool, but it gives SEO specialists the best information available on how a site performs in terms of CTR in search results.

Given the tool's limitations, it is important to control as much of the data it provides as possible. To do that, choose the best source for extracting data, remove low-impression keywords and use the right rounding methods. If you do all of this, you will be much more likely to get accurate and consistent CTR curves for your site.