Tips for Beginner SEO Specialists

SEO can be summed up in one phrase: "A powerful marketing tool, but only if you avoid mistakes." Search engine optimization is indeed a complex, multi-level process that aims not only to bring traffic to a site but also to improve the product as a whole and build relationships with customers. Avoiding missteps and choosing the right optimization strategy is hard, especially if you are promoting your own project for the first time.

Specialists at the Rookee service analyzed the most common mistakes in website promotion and showed, with practical examples, how to fix them.

So, here are 20 critical SEO mistakes and ways to fix them:

1. Mistake: ignoring robots.txt

A common mistake that holds back a site's promotion.

Robots.txt is a text file located in the site's root directory. It is a set of rules that defines indexing parameters for search engine robots. Put simply, it tells search engines which pages of the site to index and which to skip.

What is best closed off from search engines:

- entry points for paid channels (UTM tags and the like);

- output of site features (search, filters, product display options, print versions, and so on);

- duplicates of the home page;

- foreign-language versions of the site (if they duplicate content from the main site);

- pdf, doc, and xls files (if their content duplicates content on the main pages);

- service pages (cart, user account, registration, login).

How to check:

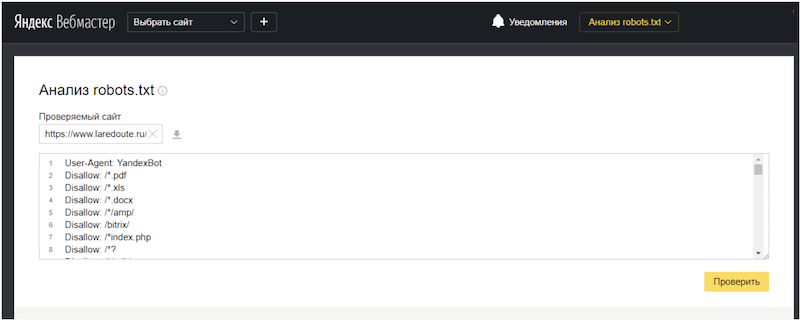

In Yandex Webmaster you can check whether a page is closed to indexing without registering in the service.

How to close pages:

For example, site search can generate a huge number of duplicate pages.

Suppose the search URLs look like this:

- Site.ru/search/?search.text=мужские-джинсы

- Site.ru/search/?search.text=женские-джинсы

- Site.ru/search/?search.text=горячие-беляши

All of the URLs above share a common path segment:

/search

To close these pages, use the directive:

Disallow: /search

Disallow prohibits indexing of sections or individual pages of the site.

Disallow: /search covers the search pages. This line tells search robots that they do not need to crawl the site's internal search results.

Next, we check and assemble a new, correct robots.txt in Yandex Webmaster, copy it, and place it as a text file in the site's root directory.

In the same way, you can check and close from indexing every kind of duplicate page listed above.
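Before uploading a new robots.txt, the rule can also be checked locally. Below is a minimal sketch that uses Python's standard `urllib.robotparser`; the file contents, the `is_blocked` helper, and the sample URLs are assumptions made for illustration, and the result shows how this parser reads the rule, not necessarily how every search engine does.

```python
from urllib.robotparser import RobotFileParser

# Candidate robots.txt with the rule discussed above (applies to all robots).
ROBOTS_TXT = """\
User-agent: *
Disallow: /search
"""


def is_blocked(robots_txt: str, url: str, user_agent: str = "*") -> bool:
    """Return True if robots_txt forbids user_agent from fetching url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(user_agent, url)


# Hypothetical URLs on the example domain.
urls = [
    "https://site.ru/search/?search.text=jeans",
    "https://site.ru/catalog/jeans/",
]
for url in urls:
    print(url, "blocked" if is_blocked(ROBOTS_TXT, url) else "allowed")
```

Rules are matched by path prefix, so `Disallow: /search` would also cover a path such as `/search-tips`. It is worth running the check against a few pages that must stay open.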

2. No work is done on potential duplicate pages

Let's look at how to find duplicates when they are not obvious and are not on the list from the first point. For example, a CMS may generate duplicate pages on its own because of how it works.

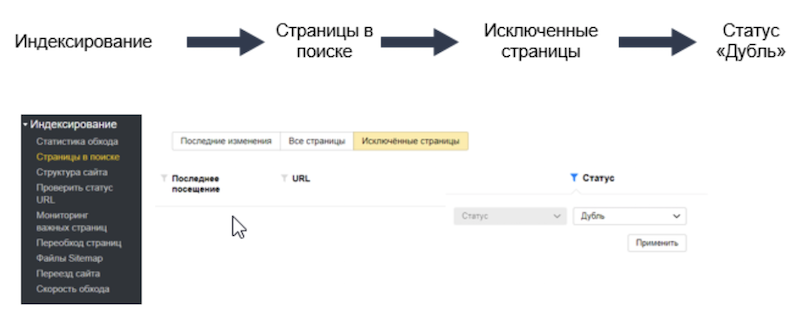

To find them, open Yandex Webmaster, go from the "Indexing" section to "Pages in search", click the "Excluded pages" tab, and choose "Duplicate" in the "Status" filter. Then review which pages have already been excluded from search because Yandex considered them duplicates.

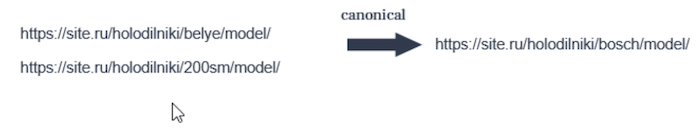

Every page with duplicated meta tags needs to be checked. If the pages really are duplicates, fix them: close them in robots.txt, set up a 301 redirect, or add the rel="canonical" attribute. If the pages are not duplicates and only their meta tags repeat, that still counts as a mistake, and the meta tags need to be made unique.

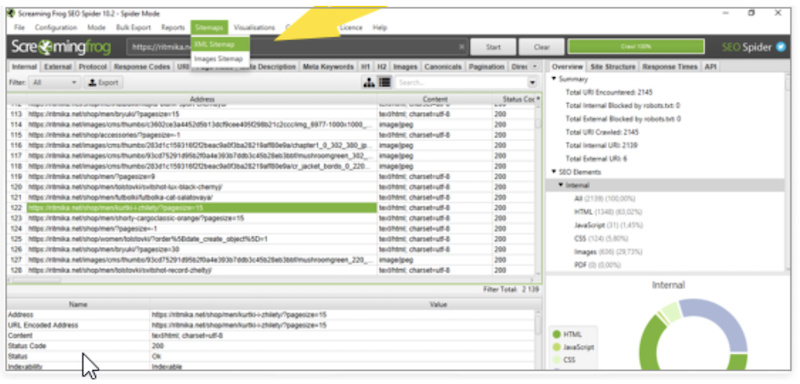

Another way to find duplicate pages is the Screaming Frog SEO Spider program (unfortunately, the full version is paid).

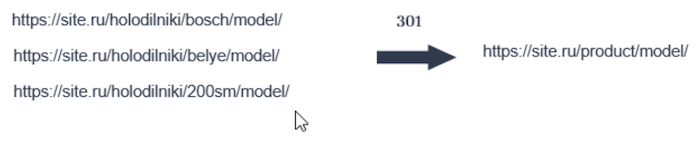

For example, the program revealed a mistake: one product appears in several sections under three different URLs that duplicate each other.

Three ways to solve the problem:

- Bring all pages to a single URL and set up a 301 redirect, placing each product in one section only.

- Set the canonical attribute to point to one of the duplicate pages, making it the main one.

- Set up a 301 redirect to one of the duplicate pages.
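If a crawler export is available, pages with repeating meta tags can also be grouped with a few lines of code. The sketch below assumes a hypothetical export where each page is a dict with `url`, `title`, and `description` keys; the field names and sample data are illustrative, not the format of any particular tool.

```python
from collections import defaultdict


def find_meta_duplicates(pages):
    """Group URLs that share the same (title, description) pair."""
    groups = defaultdict(list)
    for page in pages:
        key = (page["title"].strip().lower(), page["description"].strip().lower())
        groups[key].append(page["url"])
    # Keep only groups where more than one URL has the same meta tags.
    return [urls for urls in groups.values() if len(urls) > 1]


# Hypothetical crawl export: one product reachable under two URLs.
pages = [
    {"url": "/jeans/model-1", "title": "Model 1 jeans", "description": "Blue jeans"},
    {"url": "/sale/model-1", "title": "Model 1 jeans", "description": "Blue jeans"},
    {"url": "/jeans/model-2", "title": "Model 2 jeans", "description": "Black jeans"},
]
print(find_meta_duplicates(pages))
```

Each resulting group still needs a manual look: the pages may be true duplicates (redirect or canonical) or different pages that only share meta tags (rewrite the tags).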

3. Mistake: sitemap.xml is out of date

According to Yandex Help, a Sitemap is a file with links to the site's pages that tells search engines about the site's current structure. It helps search engines index the site quickly and thoroughly. It has to be kept up to date. For example, after changing robots.txt, you need to update the sitemap as well.

How to generate it:

You can update the sitemap and keep it current with Screaming Frog.

This is not the only option. There are dedicated plugins that generate the sitemap automatically.

Plugins for generating sitemap.xml:

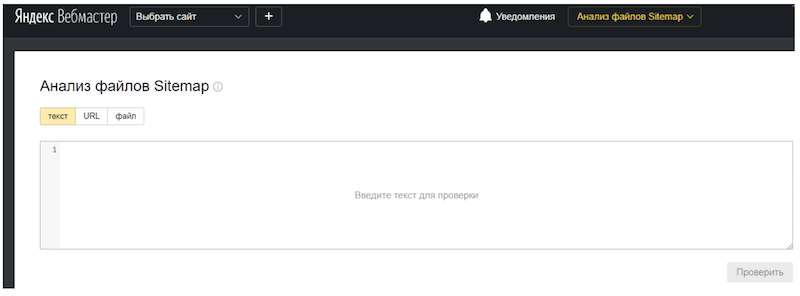

How to check:

Analyze the sitemap with Yandex Webmaster.
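For a small site, the sitemap can also be assembled with a short script. The following sketch builds a sitemap.xml from a list of URLs and skips paths that are closed in robots.txt; the `build_sitemap` function, the URL list, and the excluded prefix are assumptions for illustration only.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlsplit

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls, excluded_prefixes=()):
    """Return sitemap.xml as bytes, skipping URLs whose path starts with an excluded prefix."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        path = urlsplit(url).path or "/"
        if any(path.startswith(prefix) for prefix in excluded_prefixes):
            continue  # closed in robots.txt, so it should not be listed
        ET.SubElement(ET.SubElement(urlset, "url"), "loc").text = url
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)


# Hypothetical page list; "/search" mirrors the Disallow rule from the first point.
site_urls = [
    "https://site.ru/",
    "https://site.ru/catalog/jeans/",
    "https://site.ru/search/?search.text=jeans",
]
sitemap_xml = build_sitemap(site_urls, excluded_prefixes=("/search",))
print(sitemap_xml.decode("utf-8"))
```

Keeping the excluded prefixes in one place makes it harder for robots.txt and the sitemap to drift apart.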

4. Too much JavaScript

JavaScript (JS for short) is a popular programming language that site owners use to create animations, pop-up banners, and other user interface elements. Search engines have long said that they can process JavaScript, which would mean that using it does not affect promotion. Practice shows otherwise: a site can run into promotion problems if it is rendered incorrectly in the index.

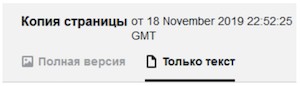

This is easy to check. Open the saved copy of the site from Yandex search results, click the "Text only" link, and look at the text copy.

Alternatively, append "&cht=1" to the resulting URL. The text version of the page will open. This is how a search engine robot sees the page. Check whether all elements of the site are displayed correctly; if not, ask a developer to fix the errors.

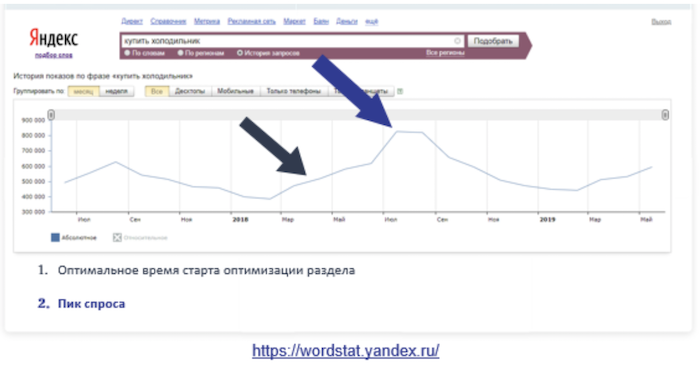
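A rough local approximation of the same check is to extract the text that is present in the raw HTML without running any scripts. The sketch below uses Python's standard `html.parser`; it is not how a search robot actually works, and the `TextOnly` class and the sample markup are assumptions for illustration.

```python
from html.parser import HTMLParser


class TextOnly(HTMLParser):
    """Collect text that is present in the HTML itself, ignoring scripts and styles."""

    SKIP = {"script", "style"}

    def __init__(self):
        super().__init__()
        self.parts = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth and data.strip():
            self.parts.append(data.strip())


def visible_text(html):
    parser = TextOnly()
    parser.feed(html)
    parser.close()
    return " ".join(parser.parts)


# Hypothetical page: the price is written into the page only by JavaScript.
sample_html = (
    "<html><body><h1>Jeans</h1><div id='price'></div>"
    "<script>document.getElementById('price').textContent = '1990';</script>"
    "</body></html>"
)
print(visible_text(sample_html))
```

If important content such as prices or descriptions is missing from this output, it exists only after scripts run and deserves a closer look in the text copy.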

5. Sections with upcoming seasonal demand are not prioritized

As a rule, optimizing a site, deploying the changes, and getting all of them indexed takes about 2 months. So the sections to promote first are those whose queries will be in peak demand roughly 2 months after the SEO campaign starts.

To do this, you need a clear picture of which sections will be in greatest demand in 2 months, in 3, in 4, and so on. Some queries have an obvious demand peak (for example, "buy Christmas tree ornaments"), while others are far from obvious ("buy a refrigerator" peaks in July and August).

You can check the demand peak in Yandex.Wordstat.

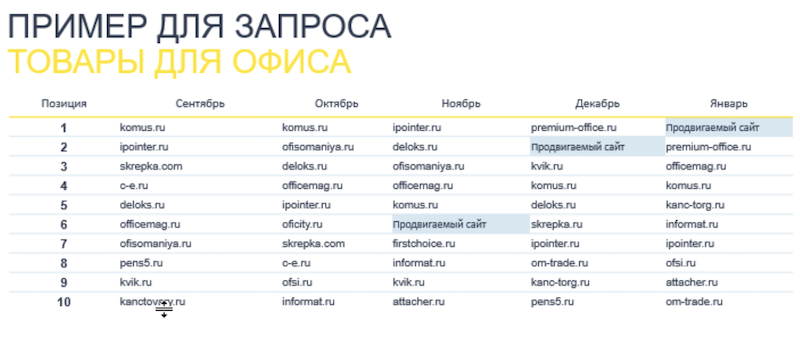
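The planning arithmetic can be sketched in a few lines. The example takes monthly demand figures (for instance, copied by hand from Wordstat), finds the peak month, and subtracts the 2-month lead time mentioned above; the function name and the numbers are made up for illustration.

```python
LEAD_TIME_MONTHS = 2  # time for optimization, deployment, and indexing (from the text)


def peak_and_start(monthly_demand, lead_time=LEAD_TIME_MONTHS):
    """Return (peak_month, start_month), both as numbers from 1 to 12."""
    peak = max(monthly_demand, key=monthly_demand.get)
    # Step back lead_time months, wrapping around the year boundary.
    start = (peak - lead_time - 1) % 12 + 1
    return peak, start


# Hypothetical monthly impressions for "buy a refrigerator" (month number -> impressions).
refrigerator = {1: 40, 2: 38, 3: 42, 4: 50, 5: 65, 6: 90,
                7: 130, 8: 125, 9: 70, 10: 55, 11: 50, 12: 45}
print(peak_and_start(refrigerator))
```

With these sample numbers the peak falls in July, so work on the section should begin in May at the latest.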

6. Positions are already in the top, so optimization is not needed

SEO is an ongoing process. The site owner's stance that "the queries are already in the top, so there is no need to keep paying for promotion" therefore does not pay off. Search engines evolve, and new ranking factors and algorithms appear. In a month or two, a page from the top 3 may fail to withstand competition from other sites that keep developing and changing in line with new demands from users and search engines.

What this approach leads to:

- visibility in search results declines;

- sales fall even while the SEO KPIs are formally being met.

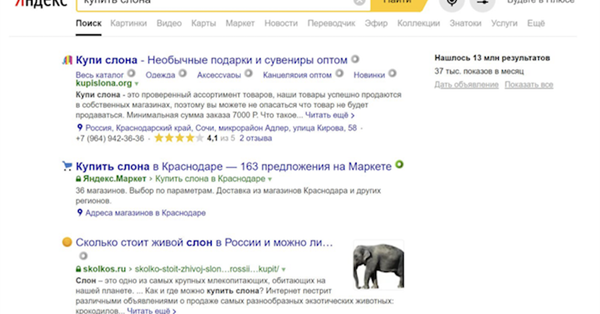

7. Only high-frequency, competitive queries

SEO specialists divide all queries by frequency, a parameter that shows how often a query is entered into the search box:

- low-frequency (LF);

- mid-frequency (MF);

- high-frequency (HF).

Popular (high-frequency) queries are very important, and giving them up completely is not recommended. But successful SEO does not live by them alone.

Drawbacks of high-frequency queries:

- A large number of ads. The more popular a query, the more companies want to advertise on it.

- Large, high-budget brands in the results, which are hard to compete with.

What this leads to:

- a long time to get the query into the top of search results;

- low CTR;

- small long-tail coverage;

- high promotion cost.

The next mistake follows logically from these drawbacks:

8. No low-frequency and micro-frequency queries

Users enter low-frequency queries rarely, but these queries matter too. Low-frequency queries are usually more specific and bring in an audience that is closer to buying. The semantic core should include queries of every frequency.

What is typical of low-frequency and micro-frequency queries:

- They are easier to get into the top.

- High refined demand (narrower demand: the number of impressions for the exact query, without longer phrases that contain it).

- High CTR. According to a study by the Rookee service, a low-frequency query almost always has a higher CTR.

- High conversion. A person who enters the low-frequency query "buy women's red Italian down jacket" most likely knows exactly what they want.

9. Queries that already bring traffic are not taken into account

When you collect new queries and start optimizing pages for them, you can lose traffic already earned from other keywords, and the site will start losing visitors.

To prevent this, look in Yandex.Metrica to see which phrases already make the site's pages visible in search, export the list of queries that currently bring traffic, add it to the queries you have selected, and remove duplicates.
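Merging the two lists and removing duplicates can be sketched as follows. The example assumes both lists are plain lists of query strings; the normalization rule (lowercase, collapsed spaces) and the sample queries are illustrative assumptions.

```python
def normalize(query):
    """Lowercase the query and collapse repeated whitespace."""
    return " ".join(query.lower().split())


def merge_queries(selected, existing):
    """Combine newly selected queries with those that already bring traffic, without duplicates."""
    seen = set()
    merged = []
    for query in [*selected, *existing]:
        key = normalize(query)
        if key and key not in seen:
            seen.add(key)
            merged.append(key)
    return merged


# Hypothetical lists: new queries and an export of queries that already bring traffic.
selected = ["Buy Jeans", "mens jeans"]
existing = ["buy  jeans ", "jeans sale"]
print(merge_queries(selected, existing))
```

Order is preserved, so the newly selected queries stay at the top and the existing traffic-bearing queries are appended after them.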

10. Clustering is based only on logic, not on statistics

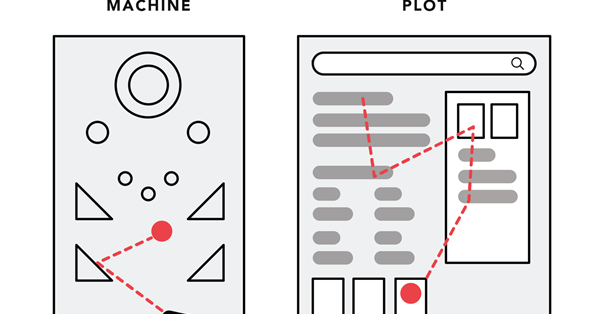

SEO specialists use the term clustering for grouping queries by intent, that is, by the goal of the user who types the query into a search engine.

When grouping queries by user needs, do not rely on human logic; it is more accurate and reliable to trust only the analytics services of Yandex and Google. Users are unpredictable, and search engines have their own view of clustering. You can view the search results with the free service coolakov.ru.
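One common way to put this idea into practice is to group queries whose search results overlap. The sketch below is a simplified greedy version of that technique, not the algorithm of any particular service; the `cluster_by_serp` function, the threshold, and the sample results are assumptions for illustration.

```python
def cluster_by_serp(serps, min_shared=3):
    """Greedy clustering: a query joins the first cluster whose seed query
    shares at least min_shared result URLs with it, otherwise it starts a new cluster."""
    clusters = []  # each item is (seed_urls, [member queries])
    for query, urls in serps.items():
        url_set = set(urls)
        for seed_urls, members in clusters:
            if len(seed_urls & url_set) >= min_shared:
                members.append(query)
                break
        else:
            clusters.append((url_set, [query]))
    return [members for _, members in clusters]


# Hypothetical top results per query (shortened to three URLs each).
serps = {
    "buy jeans": ["a.com/jeans", "b.com/jeans", "c.com/shop"],
    "jeans online store": ["a.com/jeans", "b.com/jeans", "d.com/store"],
    "how to wash jeans": ["e.com/blog", "f.com/tips", "c.com/shop"],
}
print(cluster_by_serp(serps, min_shared=2))
```

Here the two commercial queries end up together because they share results, while the informational query gets its own cluster and therefore its own landing page.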

11. The required landing pages do not exist and never will

Every query needs a landing page. If you cannot create a page for a query, do not select that query or optimize for it.

12. A query is always promoted on the same page

The idea is simple: if you have two pages that fit one query and promoting the first does not get the site into the top, try retargeting the query to the other. For example, to an internal page instead of the home page. This may be what brings success.

13. The same pages are promoted in both Yandex and Google

To explain the cause of this mistake, we need to look at the history of search. Google started out as an informational search engine and Yandex as a commercial one. That is why it is much easier to get a page with a fairly large amount of text found and ranked in Google, while Yandex favors commercial pages and ranks them well. The recommendation is to pay more attention to informational pages in Google and to commercial pages in Yandex.

14. Not knowing Excel

30-40% of an SEO specialist's work happens in Excel, so basic skills are a must. For example, with Excel you can see how many promoted queries each page has and which page could bring the most traffic, and you can find and remove duplicates.

Basic formulas for a beginner SEO specialist (Russian-locale function names, with the English names in parentheses):

- СУММ (SUM) = СУММ(number1; number2).

- СРЗНАЧ (AVERAGE) = СРЗНАЧ(number1; number2).

- СЧЁТ (COUNT) = СЧЁТ(cell_address1:cell_address2).

- ЕСЛИ (IF) = ЕСЛИ(logical_expression; "text if the logical expression is true"; "text if the logical expression is false").

- СУММЕСЛИ (SUMIF) = СУММЕСЛИ(range; criterion; sum_range).

- СЧЁТЕСЛИ (COUNTIF) = СЧЁТЕСЛИ(range; criterion).

- ДЛСТР (LEN) = ДЛСТР(cell_address).

- СЖПРОБЕЛЫ (TRIM) = СЖПРОБЕЛЫ(cell_address).

- ВПР (VLOOKUP) = ВПР(lookup_value; table; column_number; match_type).

- СЦЕПИТЬ (CONCATENATE) = СЦЕПИТЬ(cell1;" ";cell2).

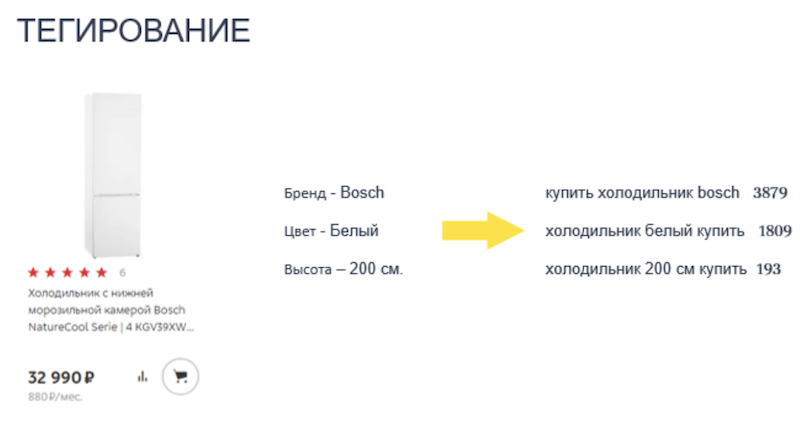
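For readers who prefer scripts to spreadsheets, the same two tasks (counting queries per page and finding the page with the most potential traffic) can be sketched in Python. The rows below are made-up sample data, and the variable names are illustrative.

```python
from collections import Counter, defaultdict

# Hypothetical rows: (query, landing page, forecast monthly traffic).
rows = [
    ("buy jeans", "/jeans/", 120),
    ("mens jeans", "/jeans/", 80),
    ("jeans sale", "/sale/", 50),
]

# Number of promoted queries per page, similar to СЧЁТЕСЛИ (COUNTIF).
queries_per_page = Counter(page for _, page, _ in rows)

# Total forecast traffic per page, similar to СУММЕСЛИ (SUMIF).
traffic_per_page = defaultdict(int)
for _, page, traffic in rows:
    traffic_per_page[page] += traffic

# The page that could bring the most traffic.
best_page = max(traffic_per_page, key=traffic_per_page.get)
print(dict(queries_per_page), dict(traffic_per_page), best_page)
```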

15. Optimizing by hand

SEO can and should be automated, not only with specialized services but also with the capabilities of the site's CMS. This helps raise the profitability of the business and speeds up the work without sacrificing quality. When an online store has thousands of products, quickly writing a meta description and Title for each one is physically impossible. In such cases, we recommend starting with automatic generation of title and description from a template.

In some cases, the texts in product cards can also be generated from a template, but only for a short time, gradually replacing them with unique descriptions. The M.Video site, for example, uses this kind of automation.

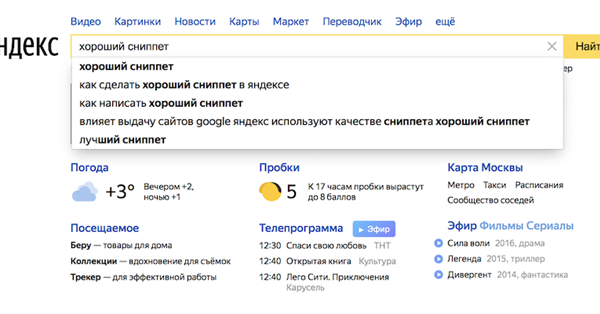
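Template-based generation of title and description can look roughly like this. The templates, field names, and shop name are hypothetical; in a real project they would come from the CMS and the product catalog.

```python
# Hypothetical templates; placeholders are filled from product fields.
TITLE_TEMPLATE = "Buy {name} for {price} RUB | {shop}"
DESCRIPTION_TEMPLATE = (
    "{name} in the {category} category at {shop}. "
    "Price: {price} RUB. Delivery and warranty."
)


def build_meta(product, shop="Example Shop"):
    """Fill the title and description templates for one product."""
    fields = {**product, "shop": shop}
    return {
        "title": TITLE_TEMPLATE.format(**fields),
        "description": DESCRIPTION_TEMPLATE.format(**fields),
    }


# Made-up product record.
product = {"name": "Model 1 jeans", "category": "Men's jeans", "price": 1990}
meta = build_meta(product)
print(meta["title"])
print(meta["description"])
```

Because every value comes from product data, the generated tags differ from page to page, which avoids the duplicated meta tags described in the second point.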

16. Not working on CTR

Yandex Webmaster lets you check CTR. If it is low, you can try to improve the situation by making the snippet more attractive, for example by adding emoji and symbols with the help of specialized services.
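CTR is the share of impressions that ended in a click. The sketch below computes it from exported click and impression counts and lists the queries that fall below a threshold; the 2% threshold and the numbers are arbitrary assumptions, not a recommendation from any service.

```python
def ctr(clicks, impressions):
    """Click-through rate in percent; 0.0 when there are no impressions."""
    return clicks / impressions * 100 if impressions else 0.0


def low_ctr_queries(stats, threshold=2.0):
    """Return queries whose CTR is below the threshold (in percent)."""
    return [
        query
        for query, (clicks, impressions) in stats.items()
        if ctr(clicks, impressions) < threshold
    ]


# Hypothetical export: query -> (clicks, impressions).
stats = {
    "buy jeans": (10, 1000),
    "buy women's red italian down jacket": (8, 100),
}
print(low_ctr_queries(stats))
```

Queries on this list are the first candidates for a rewritten, more attractive snippet.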

17. Not engaging the user

Behavioral factors are among the most important ranking factors. They cover the whole set of visitor actions on the site, such as time on site, number of pages viewed, registration, bookmarking, clicks on links, and so on.

Engaging content is exactly what improves behavioral factors. Ways to get users to actively comment on, like, and share your posts:

- short videos;

- a random discount generator;

- satisfaction surveys.

18. Promoting only the site

Yandex and Google are only part of the market. There are other platforms where you can promote your company. Keep in mind that every social network, service, and online marketplace has its own algorithms for searching documents within the site. Each of these systems can be used to promote your content inside it.

19. Forgetting about commercial factors

Commercial ranking factors include things like trust, service quality, ease of choice, and design. Based on them, users decide whether or not to take a target action, such as registering on the site, making a purchase, or filling out a form.

A short list of what a site should have:

- A Contacts page

- An About the company page

- An online consultant

- Social media buttons

- Payment information

- Prices

- Warranty, returns, cancellation

- Reviews

- An order form for a product or service

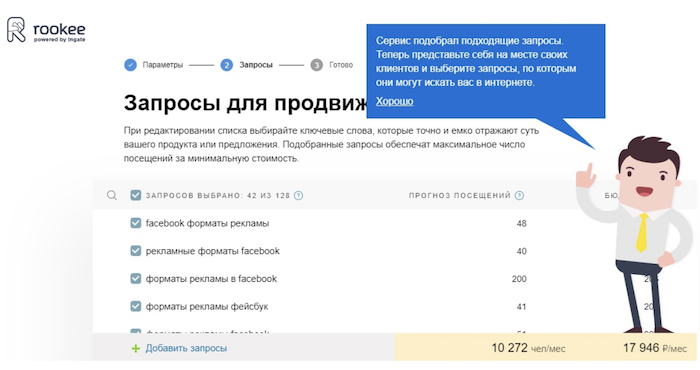

20. Building the semantic core by hand

Collecting a semantic core by hand is slow and labor-intensive. You can build one automatically and for free in the Rookee service. All you need to do is enter the site address and the promotion region. The system then selects the most relevant queries for the site and immediately forecasts traffic and budget for each of them.

You can add queries manually or from a file if, for example, you decide to also use the Serpstat service, where you:

- choose competitors;

- check the competitors;

- export their visibility and your site's visibility;

- remove unsuitable queries;

- check positions.